What is Learning-to-Defer?

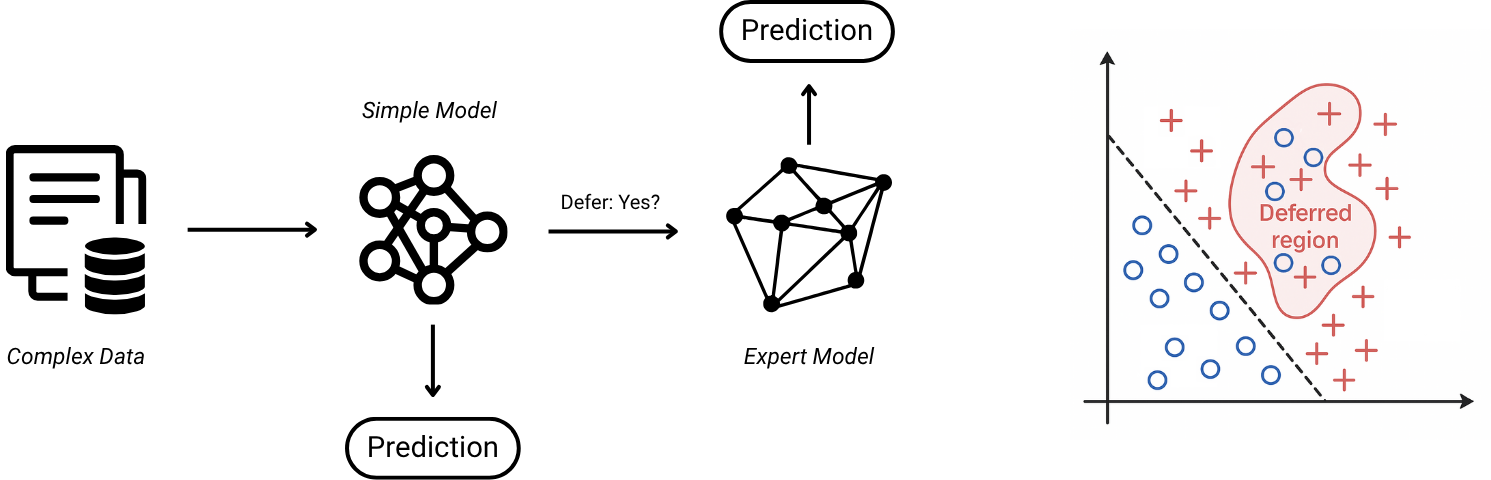

Learning-to-Defer (L2D) is a decision framework in which a model is allowed to say either “I will answer” or “I will pass this to an expert.” The key is that deferring is not treated as failure: it is a deliberate action that can improve overall system performance when mistakes are costly or when some inputs require specialized expertise.

In practice, L2D turns prediction into a routing problem: for each query, route it either to a cheap/fast model or to an expert (a human, a stronger model, or a tool), trading off accuracy, cost (money/latency/human time), and risk.
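To make the routing view concrete, here is a minimal sketch of an expected-cost rule for a single query. It assumes we already have a calibrated model confidence and an estimate of the expert's accuracy; the names `RoutingCosts`, `should_defer`, and the cost values are hypothetical and chosen only for illustration, not taken from any particular L2D method.

```python
from dataclasses import dataclass


@dataclass
class RoutingCosts:
    """Hypothetical cost parameters; names and units are illustrative."""
    error_cost: float   # cost of acting on a wrong answer
    expert_cost: float  # cost of consulting the expert (money, latency, human time)


def should_defer(model_confidence: float, expert_accuracy: float, costs: RoutingCosts) -> bool:
    """Return True when deferring has lower expected cost than answering."""
    # If the model answers, it is wrong with probability 1 - confidence.
    cost_answer = (1.0 - model_confidence) * costs.error_cost
    # If we defer, we pay the expert fee, and the expert can still be wrong.
    cost_defer = costs.expert_cost + (1.0 - expert_accuracy) * costs.error_cost
    return cost_defer < cost_answer


# Assumed example values: an error costs 10 units, the expert costs 1 unit.
costs = RoutingCosts(error_cost=10.0, expert_cost=1.0)
print(should_defer(0.95, 0.98, costs))  # confident model: answering is cheaper
print(should_defer(0.60, 0.98, costs))  # uncertain model: deferring is cheaper
```

In a learned L2D system the rule is typically trained jointly with the predictor rather than hand-set like this; the sketch only shows the trade-off the router has to resolve.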

My focus

My work studies L2D from both theoretical and practical angles: how to design routing rules with formal guarantees (e.g., consistency relative to the best defer/act policy in a hypothesis class), and how to make these systems reliable under limited feedback, distribution shift, and multiple experts.
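As a sketch of what "best defer/act policy" can mean, one common single-expert formalization uses a 0-1 deferral loss. The notation here is illustrative and assumed: $h$ is the classifier, $r(x) \in \{0, 1\}$ is the routing decision ($1$ means defer), $m$ is the expert's prediction, and $c \ge 0$ is a consultation cost.

$$
\ell(h, r; x, y, m) = \mathbb{1}[h(x) \neq y]\,\mathbb{1}[r(x) = 0] + \bigl(\mathbb{1}[m \neq y] + c\bigr)\,\mathbb{1}[r(x) = 1]
$$

Minimizing the conditional expectation of this loss pointwise gives the rule: defer on $x$ exactly when the expert's expected cost is below the best achievable model error,

$$
r^{*}(x) = 1 \iff \Pr(M \neq Y \mid X = x) + c \;<\; 1 - \max_{y} \Pr(Y = y \mid X = x).
$$

Under this formalization, a trainable surrogate loss is called consistent when driving its excess risk to zero also drives the excess deferral risk to zero; the hypothesis-class version compares against the best pair $(h, r)$ within the class rather than against all measurable functions.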

Concrete examples

- Healthcare triage: a model suggests a diagnosis for routine cases, but defers uncertain or rare patterns to a clinician.

- Customer support: a chatbot answers common questions; it routes billing disputes or policy exceptions to a human agent.

- GenAI content assistance: an LLM drafts routine emails or summaries; if the prompt is ambiguous, high-stakes (legal/medical), or it detects low confidence, it defers to a human editor for verification and final wording.

- Finance (stock prediction/trading): a model issues a buy/sell/hold signal under normal market conditions; during earnings releases, macro shocks, or when uncertainty/risk is high, it defers to a risk manager (or a stricter rule-based guardrail) before any trade is executed.

Why this is useful

- Safer automation: reduce catastrophic errors by escalating the right cases.

- Efficient use of experts: spend human/compute budget only where it helps most.

- Measurable trade-offs: explicitly control accuracy vs cost vs latency.

- Guarantees (when assumptions hold): provide conditions under which the learned routing approaches an optimal defer/act policy.
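The accuracy-versus-cost trade-off can be measured directly on held-out data. The following is a minimal sketch assuming a simple confidence-threshold router (defer whenever model confidence falls below a threshold). The function name `evaluate_threshold` and the toy records are made up for illustration and do not come from any real experiment.

```python
def evaluate_threshold(records, threshold):
    """Score a confidence-threshold router.

    records: list of (model_confidence, model_correct, expert_correct) tuples.
    Queries with confidence below `threshold` are deferred to the expert.
    """
    n = len(records)
    deferred = sum(1 for conf, _, _ in records if conf < threshold)
    correct = sum(
        (expert_ok if conf < threshold else model_ok)
        for conf, model_ok, expert_ok in records
    )
    return {
        "threshold": threshold,
        "deferral_rate": deferred / n,
        "system_accuracy": correct / n,
    }


# Toy held-out set (illustrative values only).
records = [
    (0.99, True, True),
    (0.95, True, True),
    (0.90, True, False),
    (0.80, False, True),
    (0.70, True, True),
    (0.60, False, True),
    (0.55, False, True),
    (0.40, False, False),
]

# Sweep thresholds to trace deferral rate against system accuracy.
for t in (0.0, 0.75, 0.85, 1.01):
    print(evaluate_threshold(records, t))
```

Sweeping the threshold traces out a curve of deferral rate against system accuracy, from which an operating point can be chosen to fit a given expert budget. On this toy set, deferring everything is not the best choice because the expert also makes mistakes.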